Welcome to our guide on audio compression! As avid audio engineers, we understand the importance of achieving optimal sound quality. In this article, we will explore the role of an audio compressor in audio engineering and production. We will explain what audio compression does, how it affects the dynamics of sound, and how it can improve overall audio quality.

Whether you’re a seasoned pro or a newbie to audio engineering, understanding audio compression is crucial in achieving a polished and professional sound. So, let’s dive in and unlock the secrets of audio compression!

Key Takeaways

- An audio compressor is a tool used in audio engineering to control the dynamic range of sound.

- Audio compression affects the loud and soft parts of sound, evening out inconsistent volume levels and preventing distortion.

Understanding Audio Compression

The introduction outlined what an audio compressor does and why it matters in audio engineering. In this section, we will take a closer look at the concept of audio compression and how it works.

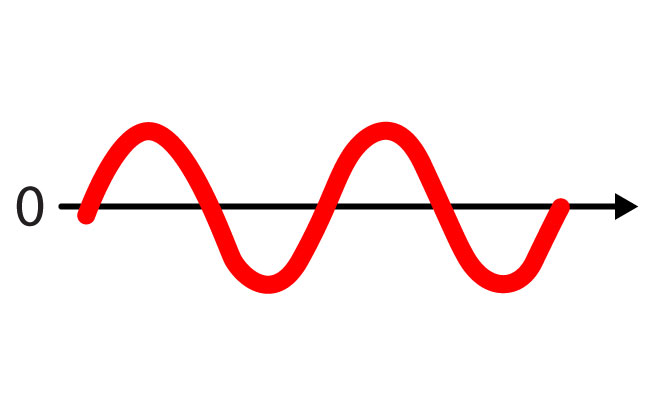

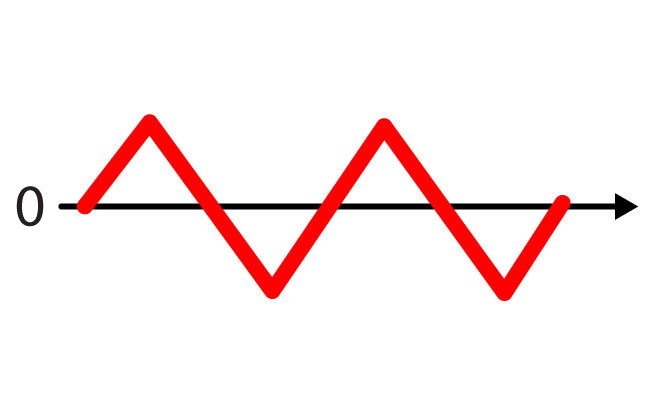

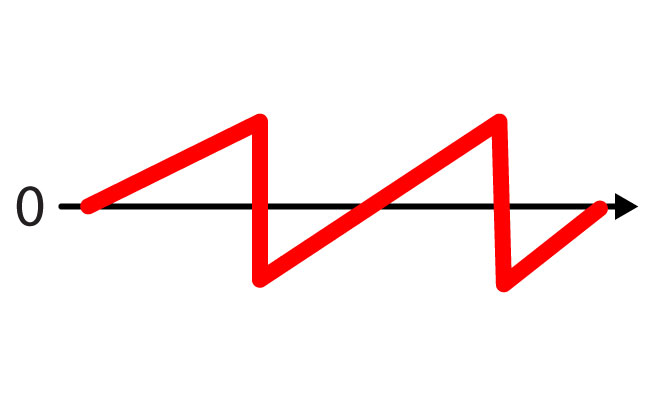

Audio compression refers to the process of reducing the dynamic range of an audio signal. Dynamic range is the difference between the loudest and softest parts of a sound. Compression controls the dynamic range by reducing the volume of the loudest parts of the sound. Once those peaks are lowered, the whole signal can be turned up, which makes the softest parts louder relative to the peaks and results in a more even overall volume.
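To make the idea of dynamic range concrete, here is a minimal sketch that measures it in decibels. The function names and sample values are our own illustrative assumptions, and samples are assumed to be normalised to the range -1.0 to 1.0.

```python
import math

def peak_db(samples):
    """Peak level in dB relative to full scale (samples assumed in [-1.0, 1.0])."""
    peak = max(abs(s) for s in samples)
    if peak == 0.0:
        return float("-inf")  # digital silence has no finite level
    return 20.0 * math.log10(peak)

def dynamic_range_db(loud_section, quiet_section):
    """Difference in peak level between a loud and a quiet passage."""
    return peak_db(loud_section) - peak_db(quiet_section)

# Illustrative values only: the loud passage peaks ten times higher.
loud = [0.0, 0.8, -0.8, 0.5]
quiet = [0.0, 0.08, -0.08, 0.05]
print(round(dynamic_range_db(loud, quiet), 1))  # 20.0
```

A tenfold difference in amplitude corresponds to 20 dB, which is the gap a compressor would narrow.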

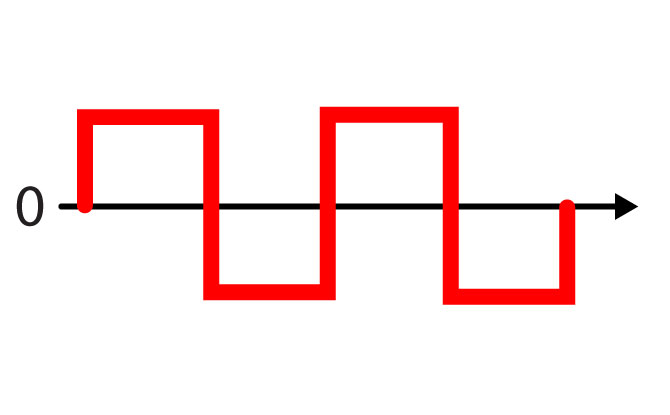

The goal of audio compression is to achieve a consistent volume level throughout the audio signal. It can also help to prevent distortion or clipping, which can occur when the volume level exceeds the maximum level that a system can handle.

This involves a two-step process. First, the compressor lowers any peaks that exceed a set threshold; then it raises the overall gain of the signal.

To achieve these results, an audio compressor applies a series of parameters to the audio signal. These include:

| Parameter | Function |
| --- | --- |
| Threshold | The level at which the compressor begins to reduce the volume of the signal. |
| Ratio | The degree to which the compressor reduces the volume above the threshold. |
| Attack | The time it takes for the compressor to start reducing the volume once the threshold is reached. |
| Release | The time it takes for the compressor to stop reducing the volume once the threshold is no longer exceeded. |
| Gain | The level at which the output signal is boosted after compression. |

By adjusting these parameters, an audio engineer can tailor the compression to the specific needs of the audio signal and create a more balanced and controlled sound.
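As a rough illustration of how threshold, ratio, and gain interact, the sketch below maps an input level in dB to an output level in dB. It assumes a simple hard-knee design and ignores attack and release; the function name and default values are hypothetical, not taken from any particular compressor.

```python
def compress_level_db(level_db, threshold_db=-18.0, ratio=4.0, makeup_db=0.0):
    """Static hard-knee compressor curve: input level (dB) -> output level (dB)."""
    if level_db > threshold_db:
        # Above the threshold, each `ratio` dB of input yields 1 dB of output.
        level_db = threshold_db + (level_db - threshold_db) / ratio
    # Makeup gain is applied to the whole signal after compression.
    return level_db + makeup_db

for level in (-30.0, -18.0, -6.0):
    print(level, "->", compress_level_db(level, makeup_db=6.0))
# -30.0 -> -24.0
# -18.0 -> -12.0
# -6.0 -> -9.0
```

With these example settings, inputs spanning 24 dB come out spanning 15 dB: the peak is pulled down, and the makeup gain lifts everything, including the quiet material.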

Next, we will explore the broader role of an audio compressor in the field of audio processing.

The Role of an Audio Compressor in Audio Processing

As we have seen in previous sections, an audio compressor plays a critical role in controlling the dynamics of sound. However, its usage extends far beyond this basic principle, making it an essential tool in the field of audio processing. In this section, we will explore the various stages of audio production where an audio compressor is widely used.

The first stage where an audio compressor finds its application is in the recording process. When recording music, vocals, or any other sound source, variations in loudness can occur due to the performer’s technique or the particular instrument being played. An audio compressor can help to even out these inconsistencies, allowing for a more consistent recording.

Once the recording is completed, the next phase is mixing, where the various recorded tracks are combined into a single, coherent mix. Here again, an audio compressor is an invaluable tool. It can be used to tame rogue peaks and ensure that all of the elements of the mix are well-balanced and sit together harmoniously.

The final stage of audio processing is mastering. This is the process of finalizing the mix, ensuring that it is optimized for playback across all types of devices and listening environments. At this stage, an audio compressor is used to further refine the dynamics of the mix. It can help to create a cohesive sound that is consistent throughout the track and maximize the perceived loudness of the audio while avoiding distortion or excessive noise.

Overall, an audio compressor is a vital tool in the field of audio processing, and its usage extends across the entire audio production chain. From recording to mastering, understanding how to use an audio compressor effectively is essential for achieving professional and polished sound quality.

Controlling Audio Levels with an Audio Compressor

When it comes to audio engineering, controlling audio levels is key to achieving high-quality sound. An audio compressor is an essential tool that helps to regulate the volume of audio signals and maintain a consistent level throughout a recording or a live performance.

By compressing the dynamic range (the difference between the quietest and loudest parts of a track), an audio compressor can even out volume inconsistencies and prevent distortion or clipping. This makes sure that every part of the track can be heard clearly, without any sudden spikes that could damage speakers or cause discomfort to the listener.

There are a few things to keep in mind when using an audio compressor to control audio levels. The threshold determines the volume level at which the compressor starts to kick in. The ratio determines the degree of compression applied once the threshold is crossed. The attack and release settings control how quickly the compressor reacts to changes in volume, while the makeup gain is used to compensate for any volume loss due to compression.
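The attack and release behaviour can be sketched as a smoothing filter applied to the gain reduction the compressor wants to apply. This is one common textbook approach (a one-pole smoother with separate time constants), offered as an assumption rather than a description of any specific unit; the function name and defaults are hypothetical.

```python
import math

def smooth_gain_reduction(target_db, sample_rate=48000,
                          attack_ms=10.0, release_ms=100.0):
    """Smooth a per-sample target gain reduction (dB, >= 0) over time."""
    attack_coeff = math.exp(-1.0 / (sample_rate * attack_ms / 1000.0))
    release_coeff = math.exp(-1.0 / (sample_rate * release_ms / 1000.0))
    current = 0.0
    smoothed = []
    for target in target_db:
        # Attack applies while reduction is increasing, release while it relaxes.
        coeff = attack_coeff if target > current else release_coeff
        current = coeff * current + (1.0 - coeff) * target
        smoothed.append(current)
    return smoothed

# A 10 ms burst that asks for 6 dB of reduction, followed by 10 ms of quiet.
target = [6.0] * 480 + [0.0] * 480
smoothed = smooth_gain_reduction(target)
print(round(smoothed[479], 2))  # 3.79, about 63% of 6 dB after one attack time
```

A longer attack means the first milliseconds of a loud hit pass through with less reduction, while a longer release makes the gain recover more gradually afterwards.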

It’s important to strike a balance between maintaining a consistent volume level and preserving the natural dynamics of the audio signal. Overcompression can result in a flat, lifeless sound, while high ratios combined with poorly timed attack and release settings can cause pumping or breathing artifacts that are distracting to the listener.

With proper compression techniques, an audio compressor can be a powerful tool for controlling audio levels and achieving optimal sound quality.

Different Types of Audio Compressors

Now that we understand the concept of audio compression and its role in audio processing, let’s take a closer look at the different types of audio compressors available. Understanding the differences between various compressor types is important for achieving the desired sound quality in your audio engineering projects.

One of the primary distinctions between compressors is whether they are analog or digital. Analog compressors use analog circuits and components to process audio signals, while digital compressors use software algorithms to achieve compression.

Analog compressors are known for their warmth and character, and are often used in recording studios for their vintage or classic sound. They work by modifying the voltage of the audio signal, which affects its amplitude and creates compression. Some popular analog compressors include the SSL G Series, Teletronix LA-2A, and the Fairchild 670.

Digital compressors, on the other hand, offer more precise control over the compression process. They can often achieve a very transparent, clean sound. Many DAWs (Digital Audio Workstations) come with built-in digital compressors, and third-party plug-ins such as the Waves SSL G-Master Buss Compressor, FabFilter Pro-C 2, or Universal Audio LA-2A plug-in are also widely used.

Another way to categorize compressors is by their basic design. There are several compressor types that are commonly used in audio engineering:

| Type | Description |
| --- | --- |
| VCA (Voltage Controlled Amplifier) | Uses a voltage-controlled amplifier to modify the gain of the audio signal. |
| Optical | Uses a light source and photocell to adjust gain; often used for its smooth, musical sound. |
| FET (Field Effect Transistor) | Similar to VCA compressors but uses a field-effect transistor instead of a voltage-controlled amplifier. |
| Tube | Uses vacuum tubes to process the audio signal; often used for its warmth and vintage, analog sound. |
| Multi-band | Divides the audio signal into separate frequency bands and applies compression to each band individually, allowing for more precise control over the sound. |
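The multi-band idea can be sketched in a few lines. The example below is a deliberately simplified assumption: it splits the signal into only two bands with a one-pole low-pass filter, and it applies instantaneous gain reduction per sample with no attack or release smoothing. Real multi-band compressors use steeper crossover filters and time-smoothed gain; all names and settings here are hypothetical.

```python
import math

def split_two_bands(samples, crossover_hz=200.0, sample_rate=48000):
    """Split a signal into low and high bands that sum back to the input."""
    alpha = 1.0 - math.exp(-2.0 * math.pi * crossover_hz / sample_rate)
    low, high, state = [], [], 0.0
    for x in samples:
        state += alpha * (x - state)  # one-pole low-pass
        low.append(state)
        high.append(x - state)        # complementary high band
    return low, high

def compress_sample(x, threshold_db, ratio):
    """Instantaneous hard-knee gain reduction on one sample."""
    if x == 0.0:
        return 0.0
    level_db = 20.0 * math.log10(abs(x))
    if level_db <= threshold_db:
        return x
    out_db = threshold_db + (level_db - threshold_db) / ratio
    return math.copysign(10.0 ** (out_db / 20.0), x)

def multiband_compress(samples, low_settings=(-20.0, 4.0),
                       high_settings=(-10.0, 2.0)):
    """Compress each band with its own (threshold_db, ratio), then recombine."""
    low, high = split_two_bands(samples)
    return [compress_sample(l, *low_settings) + compress_sample(h, *high_settings)
            for l, h in zip(low, high)]
```

Because each band has its own threshold and ratio, a boomy low end can be controlled without touching the high frequencies.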

When selecting an audio compressor, it is important to consider the specific needs of the project and the desired sonic outcome. Each compressor type and model has its own strengths and weaknesses, and can be used to achieve different effects. Experimentation and experience are key to finding the right compressor for the job.

Setting Up an Audio Compressor for Optimal Performance

Setting up an audio compressor means adjusting several parameters: threshold, ratio, attack, release, and makeup gain.

It’s important to note that the optimal settings for these parameters will vary depending on the source material and the desired effect.

However, as a general rule, a slower attack time can be used to allow transients to come through, while a release time that suits the tempo and character of the material can help to avoid pumping and breathing artifacts.

It’s also worth considering the use of makeup gain, which can be used to compensate for the overall reduction in level caused by the compressor. However, it’s important to avoid excessive use of makeup gain, as this can lead to a distorted or unnatural sound.

Ultimately, the key to setting up an audio compressor for optimal performance is to experiment with different parameters and settings until the desired effect is achieved. Remember, there’s no one-size-fits-all approach to compression, so it’s important to take the time to find the settings that work best for your specific project.


Audio Compression Techniques for Enhancing Sound Quality

While basic compression techniques can help to control dynamic range and even out audio levels, advanced compression techniques can be employed to achieve more nuanced results.

These techniques include parallel compression, sidechain compression, and multiband compression.

Parallel compression involves blending a heavily compressed signal with an uncompressed signal to create a balance between the two. This technique is useful for achieving a thicker, more present sound without sacrificing dynamic range.
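A minimal sketch of parallel compression follows, assuming a simple wet/dry blend and an instantaneous hard-knee compressor without attack or release. The function names, the `mix` parameter, and the default settings are hypothetical and chosen only for illustration.

```python
import math

def compress_sample(x, threshold_db=-30.0, ratio=10.0):
    """Heavy instantaneous compression of one sample (no time smoothing)."""
    if x == 0.0:
        return 0.0
    level_db = 20.0 * math.log10(abs(x))
    if level_db <= threshold_db:
        return x
    out_db = threshold_db + (level_db - threshold_db) / ratio
    return math.copysign(10.0 ** (out_db / 20.0), x)

def parallel_compress(samples, mix=0.5):
    """Blend the dry signal with a heavily compressed copy.

    mix=0.0 returns only the dry signal, mix=1.0 only the compressed one.
    """
    return [(1.0 - mix) * x + mix * compress_sample(x) for x in samples]

# A quiet sample below the threshold and a full-scale peak (illustrative values).
print(parallel_compress([0.01, 1.0], mix=0.5))
```

The quiet sample passes through unchanged while the peak is pulled down only partway, which is why the blend keeps more of the original dynamics than full compression would.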

| Technique | Description |
| --- | --- |
| Sidechain compression | Uses an external signal source to trigger compression on the main audio signal. This technique is often used to create a rhythmic pumping effect and to give space to other elements in the mix. |
| Multiband compression | Used to compress specific frequency bands, rather than the entire signal. This technique is useful for addressing specific balance issues and ensuring that certain frequency ranges don’t overpower others. |
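Sidechain compression can be sketched as follows: the gain reduction is calculated from the level of a separate trigger signal (for example a kick drum) and applied to the main signal (for example a bass line). This is a simplified assumption with no attack or release smoothing, and the function and parameter names are hypothetical.

```python
import math

def sidechain_duck(main, trigger, threshold_db=-20.0, ratio=4.0):
    """Reduce the gain of `main` according to the level of `trigger`."""
    out = []
    for m, t in zip(main, trigger):
        level_db = 20.0 * math.log10(abs(t)) if t != 0.0 else float("-inf")
        # Only the part of the trigger above the threshold causes reduction.
        over_db = max(0.0, level_db - threshold_db)
        reduction_db = over_db * (1.0 - 1.0 / ratio)
        out.append(m * 10.0 ** (-reduction_db / 20.0))
    return out

# The main signal is untouched while the trigger is silent,
# and ducked by 15 dB when the trigger hits full scale.
ducked = sidechain_duck([0.5, 0.5], [0.0, 1.0])
print(ducked)
```

Smoothing the reduction with attack and release times would turn this abrupt ducking into the rhythmic pumping effect described in the table.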

While these advanced techniques can be powerful tools, they should be used judiciously and with a clear understanding of their intended effect. Overuse of compression can lead to a loss of dynamics and an overall reduction in sound quality.

Common Mistakes to Avoid When Using an Audio Compressor

Using an audio compressor can be a tricky task, even for experienced audio engineers. There are several common mistakes to avoid to ensure that your compressed sound is optimal and not compromised.

Overcompression: One of the most common mistakes is to overcompress the audio signal. This is when the dynamic range is reduced too much, resulting in a flat and lifeless sound. To avoid this, be careful with the threshold and ratio settings and ensure that the sound remains natural and dynamic.

Improper ratio settings: Another mistake is to use improper ratios. For example, a high ratio like 20:1 lets the output rise by only 1 dB for every 20 dB the input rises above the threshold, which can result in excessive compression that is detrimental to the audio quality. It’s important to use the ratio setting creatively and adjust it according to the dynamic range of the sound.

Excessive use of makeup gain: Using too much makeup gain can lead to distortion and clipping, even when the audio signal is not overcompressed. It’s important to monitor the output level and adjust the gain in moderation.
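One way to keep makeup gain in check is to calculate how much headroom the compressed signal still has. The sketch below is an assumption on our part: the function name and the -1 dB ceiling are illustrative, and it looks only at sample peaks, not at perceived loudness.

```python
import math

def max_safe_makeup_db(samples, ceiling_db=-1.0):
    """Largest makeup gain (dB) that keeps the peak at or below ceiling_db."""
    peak = max(abs(s) for s in samples)
    if peak == 0.0:
        return float("inf")  # silence can take any amount of gain
    return ceiling_db - 20.0 * math.log10(peak)

# A compressed signal peaking at half of full scale (about -6 dB).
compressed = [0.1, -0.5, 0.25]
print(round(max_safe_makeup_db(compressed), 2))  # 5.02
```

Any makeup gain above this figure would push the peak past the chosen ceiling and, beyond 0 dB, into clipping.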

By avoiding these common mistakes, you can achieve optimal sound quality with your audio compressor.


The Impact of Audio Compression on Different Musical Genres

Audio compression is a crucial tool in modern audio engineering, and it is widely used in various musical genres to enhance sound quality and create a distinct artistic style. The impact of audio compression on different genres can be seen in the unique characteristics and qualities that each genre exhibits.

In genres such as electronic dance music (EDM) and hip-hop, audio compression is often used to increase the perceived loudness and impact of the music. This creates a more energetic, punchy sound that is well-suited for these high-energy genres. In contrast, genres such as classical and jazz typically use compression sparingly, controlling the dynamic range only as much as needed to keep a balanced and nuanced sound.

Some genres, such as rock and pop, use compression in more subtle ways, to even out inconsistencies in volume levels and prevent distortion or clipping. This can create a smoother and more polished sound that is well-suited for radio play or commercial use.

Regardless of the genre, the key to using audio compression effectively is to understand the unique requirements and characteristics of each style of music and tailor the compression techniques accordingly. This requires a deep knowledge of the specific elements that make each genre unique, such as instrumentation, arrangement, and mixing style.

With the rise of streaming services and the increasing importance of digital distribution, the impact of audio compression on different genres is more significant than ever. As such, it is essential for audio engineers and music producers to have a solid understanding of compression techniques and how to use them effectively to achieve optimal sound quality.

The Future of Audio Compression

As technology continues to evolve, so do the methods and tools used in audio processing. The future of audio compression looks promising with the emergence of new techniques and technologies that aim to improve upon traditional methods.

One area of advancement is in the use of artificial intelligence and machine learning algorithms for audio processing. These technologies can be used to analyze audio tracks and dynamically adjust compression parameters in real-time, resulting in more precise and effective compression.

Another promising area is the use of spatial audio compression, which takes into account the positioning of sound sources in a 3D space. This allows for more accurate and natural-sounding compression, particularly in immersive audio formats like virtual reality.

Finally, there is a growing trend towards implementing more transparent and subtle forms of compression, as opposed to heavy-handed techniques that can result in unnatural and overprocessed sounds. This shift is likely to continue as audio professionals aim for maximum fidelity and authenticity in their productions.

As technology continues to advance, we can expect to see even more sophisticated and effective methods for achieving optimal sound quality in audio production.

Conclusion

In this comprehensive article, we have unlocked the secrets of audio compression and explored its essential role in audio engineering. We have discovered how compression helps to control dynamic range and maintain a balanced sound, and how it is used across the various stages of audio production.

We have examined the different types of audio compressors available and provided practical tips on how to set them up for optimal performance. We have also explored advanced compression techniques and discussed common mistakes to avoid.

Looking to the future, we have discussed emerging trends and technologies in audio compression and how they may impact the field. And lastly, we have examined the specific requirements and characteristics of different musical genres and how compression techniques can be tailored to their unique needs.

By understanding and utilizing audio compression effectively, we can achieve optimal sound quality in our audio productions. So let’s continue to explore and experiment with this powerful tool to unlock the full potential of our sound.

FAQ

Q: What does an audio compressor do?

A: An audio compressor is a device or software that helps control the dynamic range of audio signals. It reduces the difference between the loudest and softest parts of a sound, resulting in a more balanced and consistent audio output.

Q: How does audio compression work?

A: Audio compression works by automatically adjusting the level of a signal based on certain parameters. When the signal exceeds a specified threshold, the compressor reduces its volume by a predetermined ratio. This helps to prevent peaks and excessive volume fluctuations.

Q: What is the role of an audio compressor in audio processing?

A: An audio compressor plays a crucial role in various stages of audio processing, including recording, mixing, and mastering. It helps to control audio levels, enhance sound quality, and ensure a more professional and polished audio output.

Q: How does an audio compressor control audio levels?

A: An audio compressor controls audio levels by reducing the volume of signals that exceed a certain threshold. This helps to prevent distortion, clipping, and inconsistent volume levels, resulting in a more pleasing and balanced sound.

Q: What are the different types of audio compressors?

A: There are various types of audio compressors, including analog and digital compressors. They differ in terms of their circuitry, sound characteristics, and control options. Some popular compressor models include the SSL G Series, Universal Audio LA-2A, and Waves API 2500.

Q: How do I set up an audio compressor for optimal performance?

A: To set up an audio compressor for optimal performance, you need to adjust parameters such as threshold, ratio, attack, release, and gain. These settings can vary depending on the audio source and desired outcome. Experimentation and careful listening are key to achieving the best results.

Q: What are some advanced audio compression techniques?

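A: Advanced audio compression techniques include parallel compression, sidechain compression, and multiband compression. Parallel compression blends compressed and uncompressed signals, sidechain compression uses an external signal to trigger compression, and multiband compression offers more precise control over different frequency ranges. All three can be used to enhance specific elements of a sound.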

Q: What are some common mistakes to avoid when using an audio compressor?

A: Common mistakes to avoid when using an audio compressor include overcompression, improper ratio settings, and excessive use of makeup gain. It’s important to strike a balance and use compression sparingly and purposefully to avoid negative effects on the audio quality.

Q: How does audio compression impact different musical genres?

A: Audio compression is utilized differently across various musical genres based on their specific requirements and characteristics. Different genres may require different compression techniques to preserve the dynamics or achieve a more compressed and upfront sound.